Artificial Intelligence is no longer confined to cloud servers and enterprise labs. In 2025, consumer-grade GPUs have evolved into powerful AI engines capable of running large language models, generative image tools, and deep learning workflows—all from the comfort of a desktop. Whether you’re a developer, content creator, researcher, or enthusiast, choosing the right GPU can dramatically impact your productivity and creative potential.

This guide explores the best AI-focused consumer GPUs available in 2025, breaking down their capabilities, strengths, and ideal use cases.

The AI Boom Meets Consumer Hardware

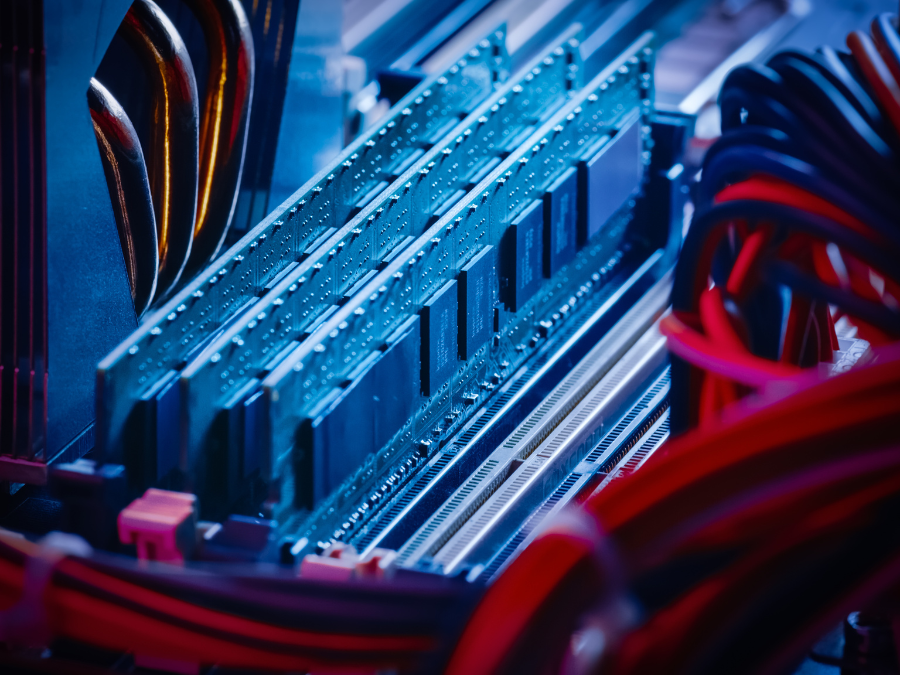

The rise of generative AI, local LLMs, and real-time inference has pushed GPU manufacturers to rethink their designs. NVIDIA, AMD, and Intel have responded with GPUs that feature:

- Specialized matrix-math units (Tensor Cores on NVIDIA, AI Accelerators on AMD, XMX engines on Intel)

- Support for low-precision formats like FP4, FP8, and INT8

- Massive memory bandwidth

- Enhanced cooling and power delivery systems

These features allow users to run models like Stable Diffusion, LLaMA, and Whisper locally, without relying on cloud infrastructure.
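
To make the idea of a low-precision format concrete, here is a minimal sketch of symmetric absmax INT8 quantization in plain Python. The weight values are made up for illustration, and real runtimes use more elaborate schemes (per-channel or per-group scales, calibration), so treat this as an outline of the principle rather than what any particular GPU or framework does.

```python
def quantize_int8(values):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.1234, -0.5, 0.3333, 1.27, -0.9876]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max rounding error={max_error:.4f}")
```

Each weight now occupies one byte instead of two (FP16) or four (FP32), at the cost of a rounding error of at most half the scale per value.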

Top AI GPUs for Consumers in 2025

1. NVIDIA GeForce RTX 5090

The undisputed leader in consumer AI performance, the RTX 5090 is built on NVIDIA’s Blackwell architecture. It features:

- 32GB GDDR7 memory

- 1.79TB/s bandwidth

- 5th-gen Tensor Cores

- Support for FP4, FP8, INT8, BF16

- Up to 838 TOPS INT8 performance

In some published LLM benchmarks it outpaces even the 80GB A100, with reported throughput above 5,800 tokens per second on optimized models. Stable Diffusion is reported to run nearly twice as fast as on the 4090, though results depend on the pipeline and settings. However, with a TDP of 575W, it requires robust cooling and a high-end PSU.

Best for: AI developers, researchers, and prosumers running large models locally.
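
As a rough illustration of what a 575W TDP means for PSU sizing, the sketch below adds up component power and applies headroom. Only the 575W figure comes from this guide; the 125W CPU, the 100W allowance for the rest of the system, and the 30% headroom are assumptions chosen for the example, and the card vendor's stated PSU requirement should take precedence.

```python
def recommended_psu_watts(gpu_tdp_w, cpu_tdp_w, other_w=100, headroom=0.3):
    """Sum sustained component power, then add fractional headroom
    to absorb transient spikes and keep the PSU out of its limits."""
    sustained = gpu_tdp_w + cpu_tdp_w + other_w
    return sustained * (1 + headroom)


# 575 W is the RTX 5090 TDP quoted above; every other number here is
# an illustrative assumption, not a measured or official value.
estimate = recommended_psu_watts(gpu_tdp_w=575, cpu_tdp_w=125)
print(f"Suggested PSU capacity: about {estimate:.0f} W")
```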

2. NVIDIA GeForce RTX 5080

A more affordable alternative to the 5090, the RTX 5080 retains many of the same AI features:

- 16GB GDDR7 memory

- 960GB/s bandwidth

- 5th-gen Tensor Cores

- 450 TOPS INT8 performance

It performs 10–20% better than the RTX 4080 Super in AI benchmarks and even outpaces the 4090 in certain inference tasks. It’s ideal for users working with models that fit within 16GB VRAM.

Best for: Creators and developers running mid-sized LLMs and image generation models.
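
Whether a model "fits within 16GB" depends mostly on parameter count and bits per weight. The sketch below estimates the largest model whose weights fit, assuming a 2GB reserve for the KV cache, activations, and framework overhead. That reserve is a guess, and real quantized formats carry some extra metadata, so the results are ballpark figures only.

```python
def max_params_billion(vram_gb, bits_per_weight, reserve_gb=2.0):
    """Largest parameter count (in billions) whose weights fit in VRAM.

    reserve_gb is an assumed allowance for the KV cache, activations,
    and framework overhead; actual usage varies by runtime and context.
    """
    usable_bytes = (vram_gb - reserve_gb) * 1e9
    bytes_per_weight = bits_per_weight / 8
    return usable_bytes / bytes_per_weight / 1e9


for bits in (16, 8, 4):
    limit = max_params_billion(16, bits)
    print(f"{bits}-bit weights: about {limit:.0f}B parameters in 16GB")
```

Under these assumptions, a 16GB card holds roughly a 7B model at 16-bit, 14B at 8-bit, and 28B at 4-bit.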

3. NVIDIA GeForce RTX 4090

Still a gold standard for many, the RTX 4090 offers:

- 24GB GDDR6X memory

- 1TB/s bandwidth

- 4th-gen Tensor Cores

- 330 FP16 TFLOPS

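It supports FP16 and BF16 operations and can handle LLMs with up to roughly 30 billion parameters using 4-bit quantization (about 15GB of weights). At 8-bit, a 30B model’s weights alone would need about 30GB, which exceeds the card’s 24GB. Despite newer cards, the 4090 remains a reliable workhorse for AI professionals.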

Best for: Mainstream users and researchers needing high compute without the 5090’s power draw.
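
Memory bandwidth matters because single-stream token generation is usually limited by how fast the weights can be read from VRAM. A common back-of-the-envelope ceiling is bandwidth divided by the size of the weights. The sketch below applies it to the bandwidth figures quoted for the three NVIDIA cards above; the 8B-parameter, 4-bit model is a hypothetical workload, and real throughput will be lower once compute, the KV cache, and software overhead are counted.

```python
# Memory bandwidth figures as quoted in this guide, in GB/s.
BANDWIDTH_GBPS = {"RTX 5090": 1790, "RTX 5080": 960, "RTX 4090": 1000}


def decode_ceiling_tokens_per_s(bandwidth_gbps, params_billion, bits_per_weight):
    """Upper bound on single-stream decode speed, assuming every
    generated token requires reading all weights from VRAM once."""
    weight_gb = params_billion * bits_per_weight / 8
    return bandwidth_gbps / weight_gb


# Hypothetical workload: an 8B-parameter model with 4-bit weights (about 4 GB).
for card, bandwidth in BANDWIDTH_GBPS.items():
    ceiling = decode_ceiling_tokens_per_s(bandwidth, params_billion=8, bits_per_weight=4)
    print(f"{card}: at most ~{ceiling:.0f} tokens/s per stream")
```

Batched serving can exceed this per-stream ceiling in aggregate, because one pass over the weights produces a token for every sequence in the batch.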

4. AMD Radeon RX 9070 XT

AMD’s RDNA 4-based RX 9070 XT introduces second-gen AI accelerators:

- 16GB GDDR6 memory

- FP8 support

- Improved ray tracing and AI inference

While it doesn’t match NVIDIA’s tensor performance, it offers solid AI capabilities for image generation and lightweight LLMs.

Best for: Budget-conscious users and AMD loyalists exploring AI workflows.

5. Intel Arc Titan

Intel’s Arc Titan is a surprise contender in the AI space:

- 12GB GDDR6 memory

- FP16 and INT8 support

- Optimized for local inference and edge AI

It’s not built for training large models, but it is well suited to running small quantized LLMs and AI-enhanced creative tools.

Best for: Beginners and hobbyists experimenting with AI locally.

Key Considerations When Choosing an AI GPU

- Memory Size: Larger models require more VRAM. Aim for 24GB+ if working with LLMs or high-res image generation.

- Tensor Core Support: Essential for accelerating AI workloads. NVIDIA leads here.

- Power and Cooling: High-end GPUs like the 5090 demand serious cooling and PSU capacity.

- Software Ecosystem: NVIDIA’s CUDA and cuDNN libraries offer unmatched compatibility with AI frameworks.

- Budget: Prices range from ₹50,000 to ₹2,00,000+. Choose based on your workload and future needs.
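
On the Memory Size point, weights are not the only consumer of VRAM: the KV cache grows with context length and batch size. The sketch below uses the standard formula (keys plus values, per layer, per KV head). The model shape is an assumption, similar to an 8B Llama-style model with grouped-query attention, and FP16 cache entries are assumed as well.

```python
def kv_cache_gib(layers, kv_heads, head_dim, context_tokens, batch=1, bytes_per_value=2):
    """Memory for cached keys and values across all layers, in GiB.

    bytes_per_value=2 assumes FP16 cache entries.
    """
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = K and V
    return per_token * context_tokens * batch / 2**30


# Assumed shape: 32 layers, 8 KV heads, head dimension 128.
for batch in (1, 4, 16):
    size = kv_cache_gib(32, 8, 128, context_tokens=8192, batch=batch)
    print(f"batch={batch}: {size:.1f} GiB of KV cache at 8,192 tokens")
```

With this shape the cache takes 1 GiB per 8,192-token sequence, so a batch of 16 needs 16 GiB on top of the weights, which is one reason extra VRAM pays off quickly.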

Use Cases for Consumer AI GPUs

- Local LLM Inference: Run models like LLaMA, Mistral, and Phi-3 without cloud latency.

- Generative Art: Use Stable Diffusion, Midjourney-style tools, and GANs for image creation.

- Video Editing: AI-powered denoising, upscaling, and scene detection.

- Voice and Audio AI: Real-time transcription, voice cloning, and enhancement.

- Coding Assistants: Run local code generation models for privacy and speed.

Final Thoughts: AI Power in Your Hands

2025 marks a turning point in consumer AI hardware. With GPUs like the RTX 5090 and 5080, users can now run complex models locally, unlocking new possibilities in creativity, productivity, and research. Whether you’re building apps, generating content, or training models, the right GPU can make all the difference.

As AI continues to evolve, expect even more specialized consumer hardware in the years ahead. But for now, these GPUs represent the best of what’s available—and they’re ready to power your next breakthrough.

FAQ: Best AI GPUs for Consumers in 2025

Q: Which GPU is best for running large language models locally?

A: The NVIDIA RTX 5090 offers unmatched performance for LLMs with its 32GB memory and 838 TOPS INT8 throughput.

Q: Is the RTX 4090 still relevant in 2025?

A: Yes, it remains a reliable option for AI workloads and can run models of up to roughly 30B parameters with 4-bit quantization.

Q: What’s the best budget GPU for AI tasks?

A: Intel Arc Titan and AMD RX 9070 XT offer solid performance for entry-level AI workflows.

Q: Do these GPUs support generative image models like Stable Diffusion?

A: Absolutely. All listed GPUs handle image generation efficiently, with the 5090 offering the fastest results.

Q: How important is VRAM for AI tasks?

A: Very. More VRAM allows for larger models, longer contexts, and larger batch sizes. Aim for 24GB+ for serious AI work.

Q: Can I use these GPUs for gaming too?

A: Yes, they all support high-end gaming alongside AI workloads.